6. Do we have the right data? / AI Product Management

Part 6 of the AI Product Management series of blogs

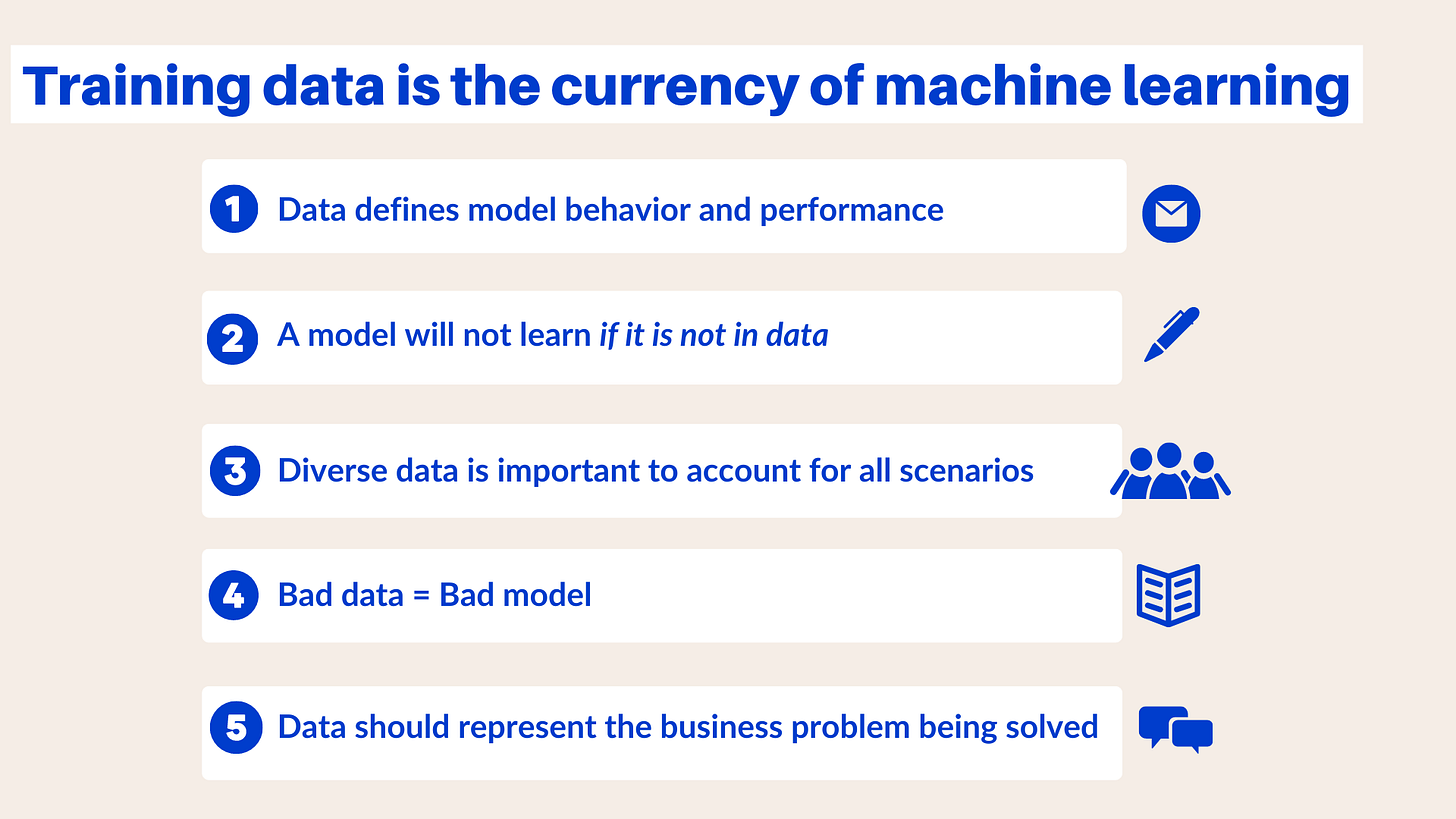

In machine learning, data is key to the performance of your model and, therefore, to the outcomes of your product. If you want a model to learn a specific concept, that concept must be clearly represented in the data the model learns from.

For example, suppose you want a model to detect faults in heavy mining machinery by spotting anomalies in the machines' audio. You must provide the model with examples of every type of noise you want it to track in real time. If you instead train it on noises simulated by humans or by other audio equipment that merely sound similar to the machinery, there is a high chance that the model will make errors or fail outright.

Characteristics of good training data include:

1. Data Size

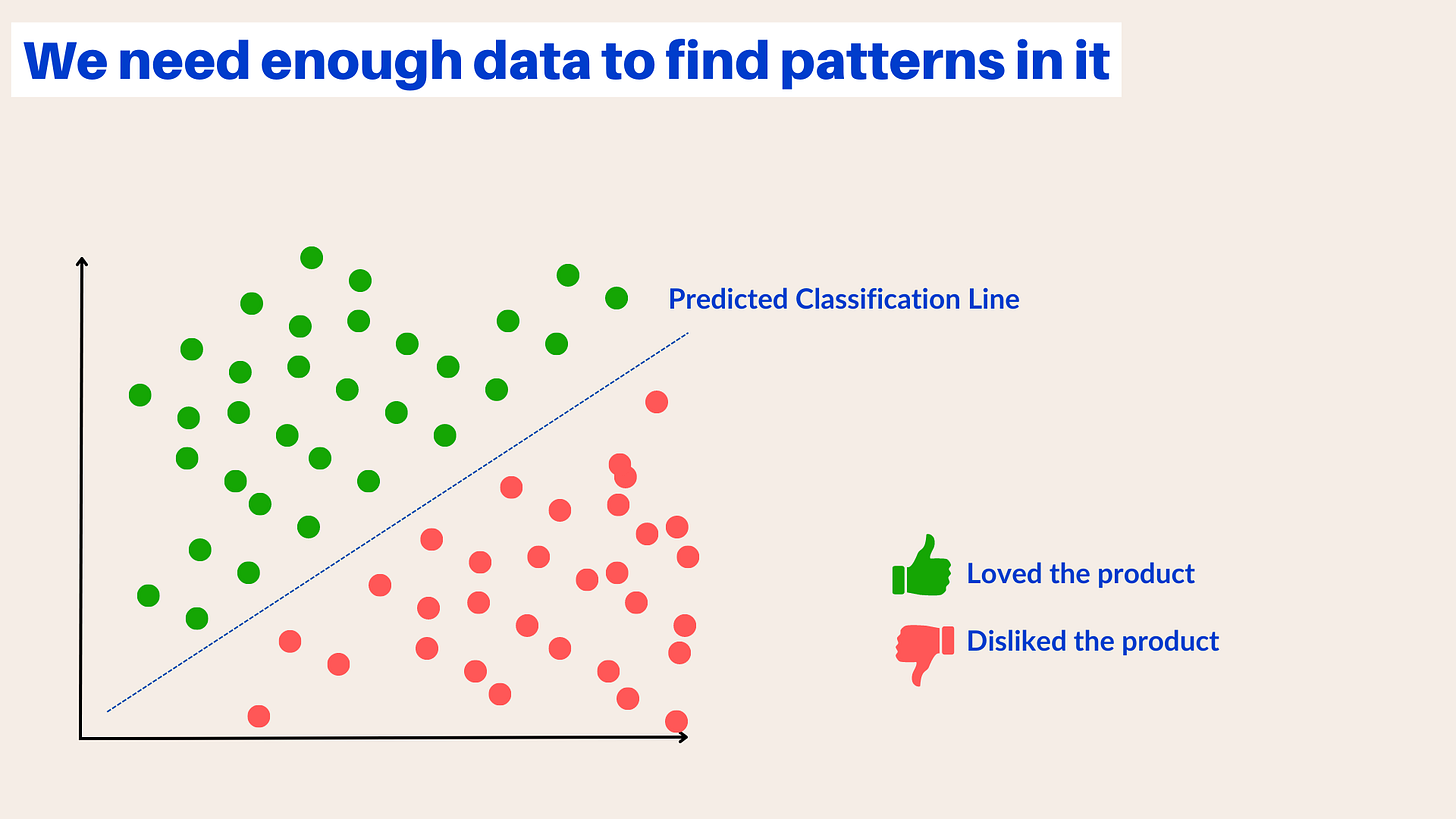

We need enough data in our training set for the model to find meaningful patterns in it.

Data size matters even more for deep learning than for traditional machine learning techniques. Deep learning models, built on neural networks, need many examples of each category to learn to differentiate between classes and identify general patterns. Too few data points, or an uneven distribution across the categories you want to distinguish, can introduce significant sampling bias into your predictions; in the extreme, the model may simply assign every input to the majority class.
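To make the sampling-bias risk concrete, here is a small Python sketch that counts how often each label appears in a training set and flags under-represented classes. The `class_balance_report` helper and its 10% threshold are illustrative assumptions, not a standard; treat it as a quick sanity check rather than a full data audit.

```python
from collections import Counter


def class_balance_report(labels, min_share=0.1):
    """Return each label's share of the dataset and the labels below min_share.

    min_share is an illustrative threshold, not an industry standard.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    shares = {label: count / total for label, count in counts.items()}
    underrepresented = sorted(
        label for label, share in shares.items() if share < min_share
    )
    return shares, underrepresented


# Hypothetical sentiment labels: 950 positive reviews, 50 negative ones.
labels = ["positive"] * 950 + ["negative"] * 50
shares, low = class_balance_report(labels)
print(shares)  # {'positive': 0.95, 'negative': 0.05}
print(low)     # ['negative']
```

With this split, a model that always predicts "positive" would already score 95% accuracy, which is exactly the one-class collapse described above.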

For example, suppose you are building a sentiment analysis model for customer reviews or tweets. You need enough labeled examples of both positive and negative sentiment for the model to learn to tell them apart.

2. Diverse Data

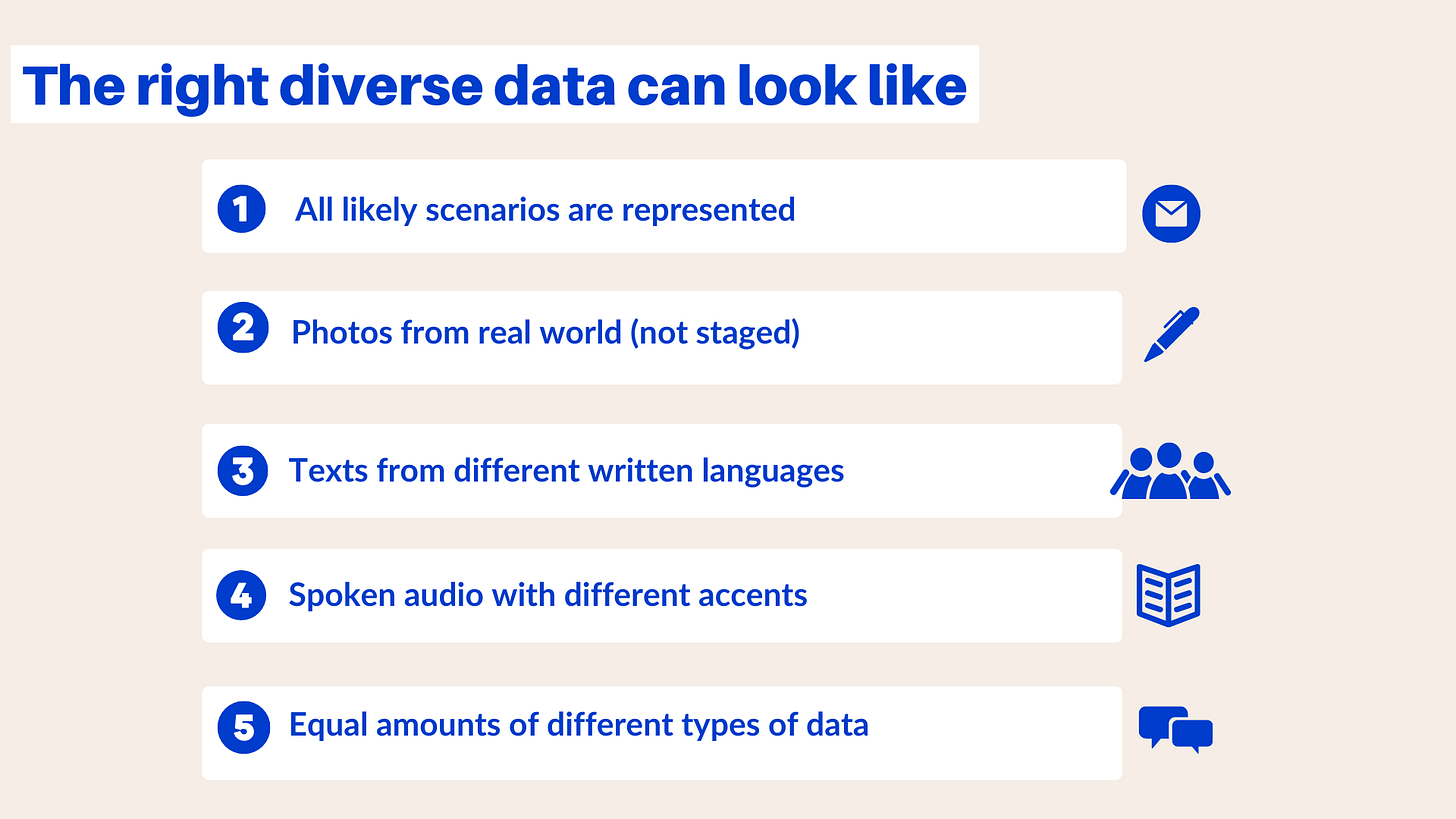

To ensure the model performs well in its intended usage, it's important to use diverse data. This means understanding all the scenarios the model is likely to encounter and accounting for those scenarios in the training data.

For example, if the model only ever sees cats in the snow during training, it is very unlikely to identify a cat pictured inside a home.

*I may or may not have included these pictures for their cuteness :)

Diverse data is also important for mitigating bias in your model and product, a topic we will discuss in detail in a later post.
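One rough way to check whether training data covers the scenarios a model will face is to count examples per (label, context) pair and list the gaps. The Python sketch below assumes each example carries hypothetical `label` and `context` fields (for instance, from annotation metadata); in a real project you would define your own list of required contexts with domain experts.

```python
from collections import Counter


def missing_scenarios(examples, required_contexts, min_count=1):
    """List (label, context) pairs that have fewer than min_count examples."""
    counts = Counter((ex["label"], ex["context"]) for ex in examples)
    labels = {ex["label"] for ex in examples}
    gaps = []
    for label in sorted(labels):
        for context in sorted(required_contexts):
            # Counter returns 0 for pairs that never appear in the data.
            if counts[(label, context)] < min_count:
                gaps.append((label, context))
    return gaps


# Hypothetical training set: every cat photo was taken in the snow.
training_set = [
    {"label": "cat", "context": "snow"},
    {"label": "cat", "context": "snow"},
    {"label": "dog", "context": "snow"},
    {"label": "dog", "context": "indoors"},
]
print(missing_scenarios(training_set, {"snow", "indoors"}))
# [('cat', 'indoors')]
```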

3. Provenance of the data (Where Did the Data Come From?)

It is important to consider the provenance (source) of your data. In large enterprises, data is often scattered across databases in different departments, with no documentation of its origin or how it was obtained. As data travels from its point of collection to the database where you encounter it, it has likely been altered or manipulated in some significant way. If you rely on unverified assumptions about how the data was obtained, you may end up building a model that is useless.

Suppose you are building a model to categorize and optimize the rental application process. You find rental applications within the company's lease management software and can easily annotate (and categorize) them. At first, you may think this process is straightforward.

However, what if this particular agency has a different process for high-value rentals? For instance, when the monthly rent exceeds a set amount, the agency provides a concierge service with dedicated personnel for both the landlord and the renter. These applications live on individual agents’ laptops (trust me, it happens!) and bypass the system entirely. If you had not dug deeper into the process, you would have missed these high-value transactions, which have a huge business impact. This is where domain knowledge of the business processes plays a crucial role in developing a robust product.

4. Data Quality

A 2017 Gartner Data Quality Market Survey estimated that poor data quality costs organizations an average of approximately $15 million per year.

Garbage In, Garbage Out

"In computer science, garbage in, garbage out (GIGO) describes the concept that flawed, or nonsense input data produces nonsense output or 'garbage'."

The quality of data depends on both the raw data and the accuracy of its annotations. To ensure high-quality data, it is important to have quality control processes in place to verify that the people annotating the data do so completely and correctly. Otherwise, the accuracy of your model will suffer.
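A common quality-control check is to have two annotators label the same sample and measure how much they agree beyond what chance would produce. The Python sketch below implements Cohen's kappa from its standard definition; the labels are made up, and what counts as an acceptable score is a project-level judgment rather than a fixed rule.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(rater_a)
    # Observed agreement: fraction of items both annotators labeled the same way.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement if each annotator labeled at random with their own label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    categories = set(freq_a) | set(freq_b)
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / (n * n)
    if expected == 1:
        # Both annotators used one identical label throughout; kappa is undefined, treat as perfect.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators on the same six reviews.
annotator_a = ["pos", "pos", "neg", "neg", "pos", "neg"]
annotator_b = ["pos", "neg", "neg", "neg", "pos", "pos"]
print(round(cohens_kappa(annotator_a, annotator_b), 3))  # 0.333
```

Raw agreement here is 4 out of 6 (about 67%), but because each annotator split their labels 50/50, chance alone would produce 50% agreement, leaving a kappa of only about 0.33.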

Suppose you are developing a healthcare product that helps doctors read X-rays by highlighting infections or cell overgrowth in the lungs. Your team is tasked with annotating the training data: a set of sample X-ray images. When annotating these images by drawing boxes around the white cloudiness (which can indicate infection), it is important to draw each box accurately. If a box is too big, it will include structures, such as bones, that are not infections. This makes it harder for the model to learn what an infection looks like, because it is effectively being trained to recognize bones and other tissue as infections.
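One way reviewers can quantify a box that is "too big" is to compare it with a reference box using intersection over union (IoU), a standard overlap measure in object detection. The Python sketch below assumes boxes in hypothetical pixel coordinates of the form `(x_min, y_min, x_max, y_max)`.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle (may be empty).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


reference = (100, 100, 200, 200)  # reviewer's box around the cloudy region only
tight = (105, 105, 195, 195)      # annotator's box, slightly too small
oversized = (50, 50, 250, 250)    # annotator's box, far too big
print(round(iou(reference, tight), 2))  # 0.81
print(iou(reference, oversized))        # 0.25
```

The oversized box contains the whole cloudy region, yet its IoU is only 0.25 because three quarters of its area covers something else.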

Similarly, if data annotation consists of transcribing voice messages, incorrect transcriptions (omitting words that were spoken, adding words that were not, or getting words wrong) will result in a poor model. That is not the model's fault; it is the result of being trained on incorrect data.
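Transcription quality is commonly measured with word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn a transcript into a trusted reference, divided by the number of words in the reference. The Python sketch below computes it with a standard edit-distance table; the example sentences are invented.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = edit distance between the first i reference words and first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution or match
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# "off" was transcribed as "of" (substitution) and "now" was added (insertion).
print(word_error_rate("turn off the pump", "turn of the pump now"))  # 0.5
```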

5. Data Security

As you gather data for your project, you'll likely need to decide how to handle security. When using personally identifiable, medical, or government-controlled data, your usage may be restricted by legal or contractual obligations. Your data acquisition strategy will need to account for controlling access to these datasets or masking the personal information they contain.
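As a minimal illustration of masking, the Python sketch below replaces email addresses and US-style phone numbers with placeholder tokens before text enters a training set. The regular expressions are illustrative assumptions that will miss many kinds of personal information (names, street addresses, international numbers); real projects usually rely on dedicated PII-detection tooling and legal review.

```python
import re

# Illustrative patterns only: common email and US-style phone formats, not all PII.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b")


def mask_pii(text):
    """Replace matched emails and phone numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


print(mask_pii("Contact jane.doe@example.com or 555-123-4567 about the lease."))
# Contact [EMAIL] or [PHONE] about the lease.
```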

Other datasets, while not inherently sensitive, may still require secure handling depending on who holds the data and why. For instance, individual YouTube channel subscriptions may not be sensitive on their own, but in aggregate they provide valuable business intelligence. Even anonymized, this data could be very damaging to YouTube in the wrong hands, because YouTube may use it to build its recommendation algorithms and target its ads.

The US Department of Veterans Affairs (VA) has implemented the REACH VET program, which uses AI to predict when a veteran is at risk of suicide so that the VA can intervene earlier. The program's team built a model on medical records, VA service usage, and medication information to estimate the suicide risk of each tracked veteran. Because the model relies on sensitive medical information, it is crucial that this data remains secure, and its collection and use must comply with HIPAA regulations.

When developing your data pipeline, you must consider all these factors: data size, diversity, provenance, quality, and security. Every step of the pipeline should be consistent, repeatable, and accurate. A well-thought-out, well-documented, repeatable pipeline will contribute greatly to the long-term success of the machine learning model in your product.
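One small ingredient of a repeatable pipeline is a train/test split that does not change between runs. The Python sketch below assigns each record to a split from a SHA-256 hash of its ID, so the same record always lands in the same split on every run and machine. The `assign_split` name, the record ID format, and the 20% test fraction are illustrative assumptions.

```python
import hashlib


def assign_split(record_id, test_fraction=0.2):
    """Deterministically assign a record to 'train' or 'test' based on a hash of its ID."""
    digest = hashlib.sha256(str(record_id).encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash to a number in [0, 1].
    bucket = int(digest[:8], 16) / 0xFFFFFFFF
    return "test" if bucket < test_fraction else "train"


# Hypothetical record IDs; rerunning this always produces the same assignments.
splits = [assign_split(f"app-{i}") for i in range(10_000)]
print(splits.count("test") / len(splits))  # roughly 0.2
```

Python's built-in `hash()` is randomized per process for strings, which is why the sketch uses `hashlib` instead: a split that changes on every run would make results impossible to reproduce.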

Many people new to AI think that building the model is the most challenging part. In reality, preparing the data and building the pipeline often require more time, resources, energy, and skill sets. Without a repeatable and scalable pipeline, even the best-designed model cannot stay in your product long enough to be useful.

Thanks for reading.

In the next post, we will discuss data fit and annotation in more detail.